In recent years, the integration of Artificial Intelligence (AI) in healthcare has promised revolutionary advancements, from precision medicine to administrative efficiency. However, that promise brings obligations of accountability, responsibility, and transparency. AI in healthcare operates on algorithms that analyze vast amounts of data to make predictions, diagnoses, and treatment recommendations.

While these systems offer unprecedented capabilities, they also raise concerns about accountability.

Who is responsible if an AI algorithm makes a mistake in diagnosis or treatment?

Is it the developers, the healthcare providers, or the regulatory bodies overseeing their usage?

How transparent are AI-driven decisions to the patients they affect?

Ultimately, AI holds immense potential to improve patient outcomes and streamline processes. However, achieving this potential requires a careful balance of accountability, responsibility, and transparency.

Exploring the Role of AI Accountability in Healthcare

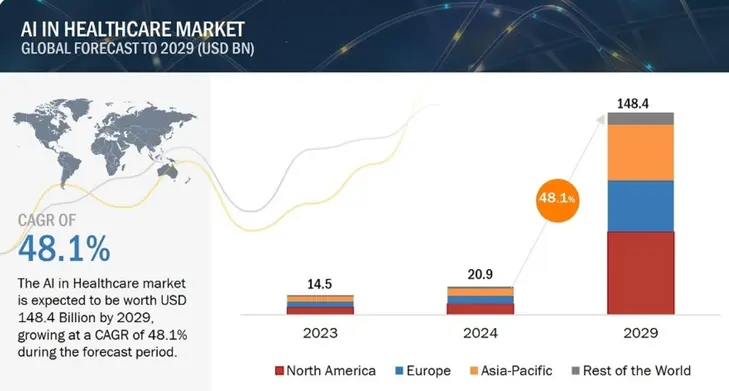

According to MarketsandMarkets research, the worldwide market for AI in healthcare was valued at USD 20.9 billion in 2024 and is projected to reach USD 148.4 billion by 2029, a Compound Annual Growth Rate (CAGR) of 48.1% over the forecast period.

In the field of healthcare, the integration of AI has promised transformative advancements, from more accurate diagnoses to personalized treatment plans. However, alongside these benefits, the introduction of AI into medical practices also raises critical questions regarding accountability.

As AI algorithms become increasingly involved in decision-making processes, ensuring their accountability becomes paramount to maintaining patient safety and trust in the healthcare system. Accountability encompasses transparency in algorithmic decision-making, responsibility for outcomes, and mechanisms for addressing errors or biases.

Healthcare providers, developers, regulators, and policymakers must collaborate to establish robust frameworks for AI accountability, consisting of rigorous testing, ongoing monitoring, and clear protocols for addressing errors or biases that may arise. Ultimately, fostering accountability not only mitigates risks but also enhances the potential of AI to revolutionize patient care, paving the way for a more efficient, equitable, and patient-centered healthcare ecosystem. Here is an example of AI accountability in the healthcare industry:

Suppose a hospital implements an AI-powered, IoT-connected diagnostic system to assist radiologists in interpreting medical images such as X-rays and MRIs. The AI system is designed to identify abnormalities and provide recommendations to aid in diagnosis.

Accountability measures in this scenario include:

- Clear documentation on how the AI works.

- Strict protocols for patient data protection.

- Rigorous testing before deployment and continuous monitoring afterward.

- Radiologists retain final decision-making authority.

- Clear roles and regular reviews to ensure ethical and effective use.

These measures ensure that the AI system enhances patient care while maintaining ethical standards and minimizing risks.

Understanding AI’s Responsibility in Healthcare

Artificial Intelligence holds immense potential for transforming healthcare by enhancing diagnostics, treatment, and patient care. However, with this potential comes the critical responsibility of ensuring AI operates ethically and transparently. AI systems must be designed to prioritize patient well-being, uphold privacy and security standards, and mitigate biases that could perpetuate disparities in healthcare delivery.

Moreover, AI developers and healthcare practitioners must collaborate to establish clear guidelines and regulations governing the use of AI in healthcare to ensure accountability and trustworthiness. As AI continues to advance, it's essential to recognize its role as a tool to augment human capabilities rather than replace them entirely, emphasizing the importance of human oversight and empathy in medical decision-making.

Ultimately, by embracing AI responsibly, we can harness its potential to revolutionize healthcare while safeguarding patient interests and promoting equitable access to quality care. A real-world illustration makes AI's responsibility in healthcare clearer:

Diabetic retinopathy is a common complication of diabetes and a leading cause of blindness worldwide. Early detection and treatment can prevent vision loss, making regular eye screenings essential for diabetic patients. However, due to the shortage of ophthalmologists and the time-consuming nature of traditional screenings, many patients do not receive timely care. In response to this challenge, researchers and developers have created AI-powered systems to assist in diabetic retinopathy screening.

In this scenario, the role and responsibilities of AI-powered systems include:

- AI can prioritize cases based on urgency, helping ophthalmologists focus on critical cases first, thereby improving patient care and reducing waiting times.

- AI systems provide consistent interpretation regardless of factors like fatigue or experience level, ensuring a reliable standard of care across different healthcare settings.

- AI algorithms are trained to detect abnormalities or potential signs of disease in retinal images with high accuracy, potentially reducing human error and oversight.

- AI can serve as a second opinion or assist ophthalmologists in making diagnoses by providing additional insights or highlighting areas that might be overlooked.
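The urgency-based prioritization in the first bullet can be sketched as a priority queue. This is a minimal illustration, not a description of any real screening product: it assumes a hypothetical model that has already assigned each case an urgency score between 0 and 1, and `triage_order` and the patient IDs are illustrative names.

```python
import heapq


def triage_order(cases):
    """Return patient IDs ordered from most to least urgent.

    `cases` is a list of (patient_id, urgency) pairs, where urgency is a
    hypothetical model score in [0, 1]. Ties keep their arrival order.
    """
    # heapq is a min-heap, so urgency is negated to pop the highest score first.
    # The arrival index breaks ties between cases with equal urgency.
    heap = [
        (-urgency, index, patient_id)
        for index, (patient_id, urgency) in enumerate(cases)
    ]
    heapq.heapify(heap)

    ordered = []
    while heap:
        _, _, patient_id = heapq.heappop(heap)
        ordered.append(patient_id)
    return ordered


# Example worklist with made-up scores.
worklist = [("patient-1", 0.2), ("patient-2", 0.9), ("patient-3", 0.5)]
print(triage_order(worklist))
```

The queue only reorders the worklist. Every case still reaches a clinician, which keeps the AI in a supporting role.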

Shedding Light on the Transparency of AI in Healthcare Decision-Making

Transparency in the application of AI within the healthcare sector is essential to ensure both the efficacy and ethical integrity of its usage. In healthcare, AI algorithms are increasingly being employed to assist in diagnosis, treatment planning, and patient care management.

However, without transparency, there is a risk of distrust among patients and healthcare professionals alike. Transparency entails not only understanding how AI algorithms arrive at their decisions but also being open about the data used to train these algorithms and any potential biases present within them.

Moreover, transparent AI systems provide explanations for their outputs, enabling healthcare providers to comprehend and trust the recommendations made by these technologies. Through transparency, stakeholders can assess the reliability and fairness of AI systems, fostering greater confidence in their integration into clinical practice. This transparency fosters accountability and facilitates continuous improvement in AI systems, ultimately enhancing patient outcomes and advancing the quality of care delivery in healthcare settings. To see what transparency looks like in practice, consider the scenario below:

Imagine a scenario where an AI system is used to assist doctors in diagnosing medical conditions from radiology images, such as X-rays or MRI scans. Transparency in this context means that the AI system not only provides accurate diagnoses but also explains its reasoning in a way that clinicians can understand and trust.

A deep learning model can be used to analyze an X-ray image to detect signs of pneumonia. In a transparent AI system, the model wouldn't just provide a binary output (pneumonia detected or not detected); it would also highlight the regions of the image that contributed to its decision. This could involve generating heatmaps to show which parts of the X-ray were most indicative of pneumonia.

Transparency in this context means the following:

- Disclose dataset details, biases, limitations, and performance metrics to patients and clinicians.

- Express the AI's confidence level or certainty in its diagnosis.

- Prioritize interpretable models for easier understanding by clinicians.
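The heatmap idea from the pneumonia example can be illustrated with occlusion sensitivity, one simple way to estimate which image regions a model relies on: mask one patch at a time and record how much the model's score drops. The sketch below is only an illustration under stated assumptions. `toy_score` is a made-up stand-in for a trained classifier, and the 4x4 "image" is a plain list of pixel intensities rather than a real X-ray.

```python
def occlusion_heatmap(image, score_fn, patch=2, fill=0.0):
    """Occlusion sensitivity: score drop when each patch is masked.

    `image` is a list of rows of pixel intensities and `score_fn` maps an
    image to a single score. Larger heatmap values mark regions the score
    depends on more strongly.
    """
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]

    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))

            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill

            # The drop in score is the importance assigned to this patch.
            drop = baseline - score_fn(occluded)
            for r in rows:
                for c in cols:
                    heatmap[r][c] = drop
    return heatmap


def toy_score(image):
    # Hypothetical stand-in for a classifier: it responds only to the
    # average brightness of the top-left 2x2 corner.
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_heatmap(image, toy_score):
    print(row)
```

With a real model, `score_fn` would return the predicted probability of the finding, and the resulting map could be overlaid on the image for the clinician.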

Strategies for Ensuring Accountability, Responsibility, and Transparency

Several key strategies can advance AI in healthcare while ensuring transparency, trustworthiness, and ethical use:

Focus on Explainable AI

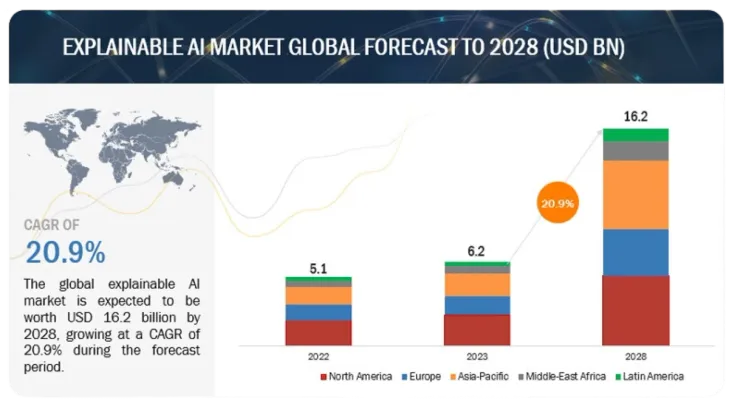

According to MarketsandMarkets, the explainable AI market was valued at $6.2 billion in 2023 and is expected to grow at a compound annual growth rate (CAGR) of 20.9%, reaching $16.2 billion by 2028.

Investing in research and development of explainable AI techniques is crucial for enhancing trust and acceptance of AI in healthcare. Explainable AI techniques aim to make the internal workings of AI models more interpretable and understandable to humans. By providing insights into how AI arrives at its conclusions, explainable AI techniques enable healthcare professionals to assess the reliability and validity of AI-generated insights, thereby improving decision-making processes and fostering trust in AI systems.

Open Source Development & Collaboration

Encouraging the open-source development of AI healthcare models promotes transparency and collaboration among developers and researchers. Open-source projects allow for peer review, scrutiny, and improvement by a broader community, which can lead to more robust and reliable AI solutions.

Human-in-the-Loop Approach

This approach integrates AI as a decision support tool within healthcare processes, while final decisions remain with qualified healthcare professionals. By combining human expertise with AI capabilities, this approach leverages the strengths of both, ensuring that AI augments human decision-making rather than replacing it entirely. It also provides a safeguard against potential errors or biases in AI predictions.
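A minimal sketch of this workflow is shown below, assuming a hypothetical model that returns a label and a confidence value. The `Prediction` type, the 0.9 threshold, and the field names are illustrative assumptions, not a standard. The key design choice is that the AI output is stored only as a suggestion, and the final decision field can be filled only through a clinician sign-off step.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    patient_id: str
    label: str
    confidence: float  # hypothetical model probability in [0, 1]


def suggest(prediction, review_threshold=0.9):
    """Wrap a model output as a suggestion that still awaits a clinician."""
    low_confidence = prediction.confidence < review_threshold
    return {
        "patient_id": prediction.patient_id,
        "ai_suggestion": prediction.label,
        "ai_confidence": prediction.confidence,
        # Low-confidence suggestions are flagged for closer scrutiny.
        "review": "detailed" if low_confidence else "standard",
        "final_decision": None,  # never set by the AI
        "decided_by": None,
    }


def finalize(record, clinician_id, decision):
    """Record the clinician's decision and whether it overrode the AI."""
    signed = dict(record)  # leave the original suggestion untouched
    signed["final_decision"] = decision
    signed["decided_by"] = clinician_id
    signed["overrode_ai"] = decision != record["ai_suggestion"]
    return signed


record = suggest(Prediction("patient-7", "pneumonia", 0.72))
signed = finalize(record, "clinician-42", "no finding")
print(record["review"], signed["overrode_ai"])
```

Logging overrides in this way also gives reviewers a record of where the AI and the clinicians disagreed.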

Education and Training

Educating healthcare professionals and the public about AI in healthcare is essential for fostering trust and understanding. This education should cover various aspects of AI, including how it works, its limitations, potential applications, and the ethical considerations involved. By promoting informed decision-making, education and training initiatives can help mitigate fears and misconceptions surrounding AI in healthcare.

Challenges to Accountability, Responsibility, and Transparency in Healthcare AI

Data Quality

Biases in datasets, such as the underrepresentation or overrepresentation of particular demographic groups, can lead to skewed outcomes. Accurate labeling and annotation of medical data is equally crucial for training AI models, since inaccuracies or inconsistencies can lead to erroneous conclusions. Ensuring patient privacy and data confidentiality is also paramount: unauthorized access or breaches can compromise patient trust and the integrity of healthcare systems.

Algorithmic Bias and Fairness

AI algorithms must be designed and tested to ensure fairness across different demographic groups. Failure to do so can perpetuate existing disparities or introduce new biases. AI models may unintentionally learn and amplify biases present in training data, producing discriminatory results. Understanding how AI algorithms make decisions is essential for detecting and mitigating bias. Lack of transparency can hinder accountability and trust.
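One simple way to test for this kind of disparity is to compare the model's sensitivity (true positive rate) across demographic groups; a large gap between groups is sometimes called an equal opportunity gap. The sketch below is a minimal illustration, assuming binary labels and hypothetical group names. It is not a complete fairness audit, and what counts as an acceptable gap is a policy decision, not something the code can determine.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute the true positive rate for each group.

    `records` is an iterable of (group, y_true, y_pred) tuples with 0/1
    labels. Groups with no positive cases are omitted.
    """
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def largest_gap(rates):
    """Difference between the best and worst served groups."""
    return max(rates.values()) - min(rates.values())


# Made-up evaluation records: (group, actual condition, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 0, 0),
]
rates = sensitivity_by_group(records)
print(rates, largest_gap(rates))
```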

Security Breaches

According to Grand View Research, the worldwide healthcare cybersecurity market reached an estimated value of USD 17.3 billion in 2023, with a projected CAGR of 18.5% from 2024 to 2030. A rise in cyberattacks and heightened concerns over privacy and security have increased the adoption of cutting-edge cybersecurity solutions, driving market expansion.

Healthcare systems are prime targets for data breaches and cyberattacks due to the sensitive nature of medical information. AI applications increase the attack surface, requiring robust security measures. Malicious actors can exploit vulnerabilities in AI systems through adversarial attacks, manipulating inputs to produce incorrect outputs. Balancing the need for model interpretability with security concerns poses a challenge. Techniques to enhance security, such as encryption, can compromise interpretability.

Best Practices and Recommendations

Ensuring accountability, responsibility, and transparency in the use of AI in healthcare is crucial for maintaining trust, protecting patient safety, and promoting ethical practices. Here are some best practices and recommendations:

Establishing Guidelines and Standards

It's important to develop comprehensive guidelines and standards for the development, deployment, and utilization of AI technologies in healthcare. These guidelines should cover crucial aspects such as data privacy, security, bias mitigation, and patient consent. To ensure their effectiveness, interdisciplinary teams comprising healthcare professionals, data scientists, ethicists, and legal experts should collaborate in their formulation. Additionally, regular updates to these guidelines are essential to keep pace with technological advancements and emerging ethical concerns.

Continuous Monitoring and Evaluation

Robust monitoring systems must be implemented to continually track the performance and impact of AI systems in healthcare settings. These systems should be capable of detecting biases, errors, and adverse outcomes. Moreover, mechanisms for ongoing evaluation of AI algorithms are necessary to ensure their continued accuracy, reliability, and safety for use in clinical practice. Furthermore, fostering a culture of reporting adverse events or unintended consequences related to AI systems is crucial, with feedback being incorporated into continuous improvement processes.
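As a rough sketch of such monitoring, the class below tracks how often the AI's output matched the clinician-confirmed label over a rolling window and raises a flag when agreement falls below a threshold. The class name, the window size, and the threshold are illustrative assumptions; a production system would also track per-group metrics, data drift, and adverse events.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track agreement between AI predictions and confirmed labels."""

    def __init__(self, window=100, alert_below=0.9):
        # deque with maxlen discards the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, prediction, confirmed_label):
        self.outcomes.append(prediction == confirmed_label)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        # Only alert once the window is full, to avoid noisy early alarms.
        acc = self.accuracy()
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return acc is not None and window_full and acc < self.alert_below


monitor = RollingAccuracyMonitor(window=4, alert_below=0.75)
for predicted, confirmed in [("normal", "normal")] * 4 + [("normal", "pneumonia")] * 2:
    monitor.record(predicted, confirmed)
print(monitor.accuracy(), monitor.needs_review())
```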

Collaboration Among Stakeholders

Promote collaboration among healthcare providers, AI developers, regulators, policymakers, patients, and other stakeholders to address the myriad challenges and opportunities associated with AI in healthcare. Open dialogue and information sharing should be encouraged to promote transparency and collective problem-solving. Establishing partnerships between academia, industry, and healthcare institutions is vital to facilitating the research, development, and implementation of AI technologies in an ethical and responsible manner.

Ethical Guidelines

Develop and adhere to ethical principles that prioritize patient welfare, autonomy, justice, and beneficence in the domain of AI in healthcare. These principles should promote fairness, transparency, and accountability in the design and deployment of AI algorithms and systems. Implementing safeguards to mitigate risks associated with AI, such as algorithmic bias, discrimination, and privacy breaches, is essential. Additionally, informed consent and applicable laws should govern the use of data by AI systems in order to respect patient privacy and confidentiality.

Use Cases of Accountability, Responsibility, and Transparency in Healthcare

The following examples illustrate how accountability, responsibility, and transparency work as essential principles in the healthcare sector, contributing to improved patient outcomes, trust between stakeholders, and a higher overall quality of care:

Accountability:

Following Up on Medical Errors:

Healthcare providers take responsibility for their actions by acknowledging and addressing medical errors. This could involve conducting thorough investigations into the causes of errors, implementing corrective measures to prevent recurrence, and informing patients and their families about what happened and the steps being taken to prevent similar incidents in the future.

Reporting Adverse Drug Reactions:

Healthcare professionals are accountable for reporting any adverse reactions or side effects experienced by patients as a result of medication. This involves promptly documenting and reporting such incidents to appropriate regulatory authorities or internal reporting systems, contributing to the overall safety and effectiveness of medications.

Responsibility:

Nurses Double-Checking Prescriptions:

Nurses play a crucial role in ensuring patient safety by responsibly double-checking medication prescriptions before administering them. This practice helps to minimize the risk of medication errors and ensures that patients receive the correct medications in the appropriate doses.

A Patient Advocating for Themselves:

Patients also have a responsibility to advocate for themselves in healthcare settings. This may involve actively participating in treatment decisions, asking questions about their care, and reporting any concerns or discrepancies in their treatment plans to healthcare providers. By taking an active role in their own healthcare, patients contribute to better outcomes and ensure that their needs are being met.

Transparency:

Hospitals Publishing Quality Reports:

Healthcare institutions demonstrate transparency by openly sharing data and information about their quality of care, patient outcomes, and safety measures. Publishing quality reports allows patients and the public to make informed decisions about where to seek healthcare services and encourages hospitals to continually strive for improvement.

Doctors Disclosing Potential Conflicts of Interest:

Physicians uphold transparency by disclosing any potential conflicts of interest that may influence their medical decisions or recommendations. This could include financial relationships with pharmaceutical companies, research affiliations, or personal biases. By disclosing such information, doctors maintain trust and integrity in their relationships with patients and ensure that treatment decisions are based on the best interests of the patient.

Final Thoughts

As we look toward the future, the scope for enhancing AI's accountability, responsibility, and transparency in healthcare is both promising and essential. In the coming years, advancements in AI are expected to further revolutionize the healthcare industry, offering unprecedented opportunities to improve patient outcomes, streamline operations, and personalize care. However, the deployment of AI in such a sensitive and critical sector also necessitates a rigorous approach to ethical considerations, ensuring that these technologies are used in a manner that is accountable, responsible, and transparent.

At Solutelabs, we understand the critical role that technology plays in revolutionizing the healthcare industry. That's why we're dedicated to transforming healthcare through innovation. With our team of experienced professionals, we specialize in providing expert mobile and web development solutions designed specifically for the healthcare sector. Let's work together to build the next generation of healthcare apps that will revolutionize the healthcare sector and drive better outcomes.
